Media Query Source: Part 28 - TechTarget (US digital magazine); Event streaming technologies a remedy for big data's onslaught

- TechTarget (US digital magazine)

- Data streaming hurdles & best practices

- Concepts challenging for traditional data pros

- Greenfield use cases relatively less complex

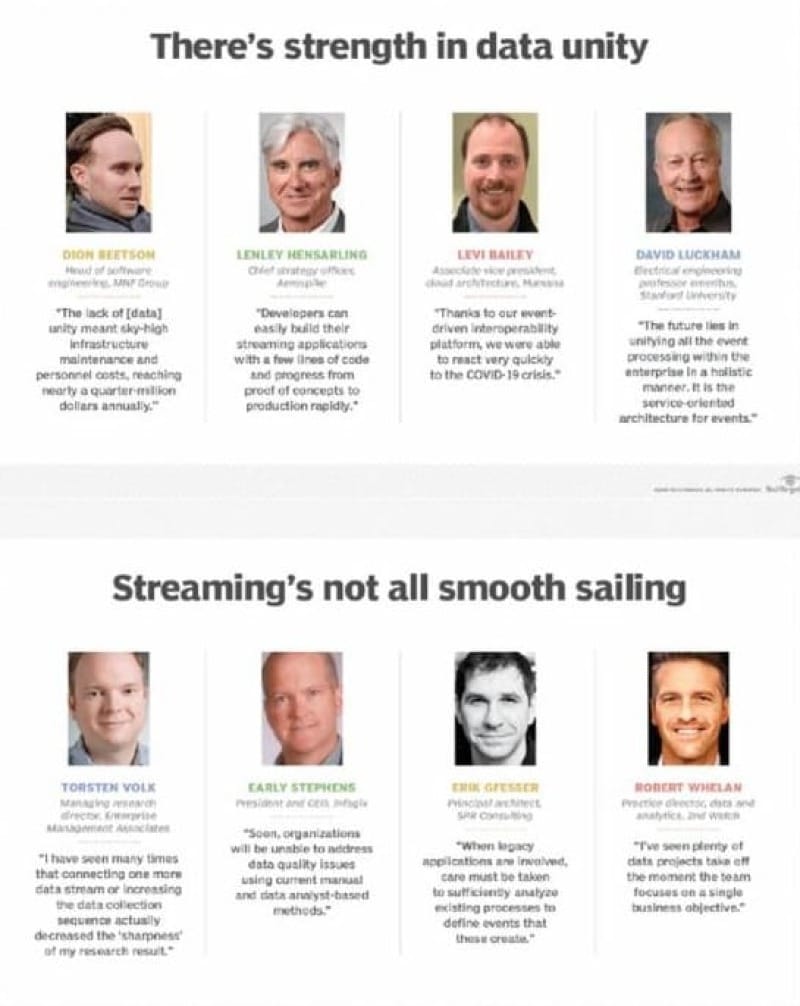

My responses ended up being included in an article at TechTarget (November 25, 2020). The extent of the verbatim quote is highlighted in orange, and the paraphrased quote in gray. The image above is from the cited article.

The responses I provided to a media outlet on October 7, 2020:

Media: I am working on a story looking at some of the hurdles organizations run into when deploying big data streaming applications.

Media: In what significant ways have real-time streaming platforms evolved over the last couple of years for providing the proper foundation for real-time analytics initiatives?

Gfesser: Recent real-time streaming products largely follow the same pattern: they permit ingestion of a high volume of data, and querying of this data via varying "windows" before it is ingested by downstream processes. However, there are a considerable number of competing products in this space, with commercial variations typically borrowing either open source code under the covers or ideas brought about by open source projects.
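To make the idea of querying a stream "via varying windows" concrete, here is a minimal sketch of one common kind of window, a tumbling (fixed-size, non-overlapping) window, in plain Python. It assumes events arrive as hypothetical `(event_time_in_seconds, key)` pairs; the function name is illustrative and is not the API of Kafka, Kinesis, or any other product, all of which provide windowing natively.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical event shape: (event_time_in_seconds, key)
Event = Tuple[float, str]


def tumbling_window_counts(
    events: Iterable[Event], window_seconds: float
) -> Dict[Tuple[float, str], int]:
    """Count events per key within fixed-size, non-overlapping time windows."""
    counts: Dict[Tuple[float, str], int] = defaultdict(int)
    for ts, key in events:
        # Align each event to the start of the window that contains it.
        window_start = (ts // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)


events = [(0.5, "click"), (3.2, "click"), (4.9, "view"), (5.1, "click"), (9.99, "click")]
print(tumbling_window_counts(events, window_seconds=5))
```

Changing `window_seconds` changes which events are grouped together, which is one sense in which the same stream can be queried via varying windows before the results flow downstream.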

A brief side note about "real-time" processing. The vast majority of such processing is actually "soft" real-time, a term typically intended to mean the opposite of traditional batch processing, in which data is processed in bulk, usually on a scheduled basis. I have also developed "hard" real-time software that processed data within strict time constraints for activity taking place in physical space. Unlike hard real-time processing, the soft real-time processing that enterprises typically need is more graceful, because one of the features it increasingly provides is the ability to replay past events. Because hard real-time processing typically reacts to physical events, opportunities to react are likely to be lost if processing is not carried out in a timely manner. While soft real-time events, such as actions taken by users in a web application, can also require timely reaction, and there are advantages to preventing such loss, the downstream loss of an upsell opportunity is likely to be considered less detrimental than a lapse in worker safety in a factory.

In addition to permitting ingestion of a high volume of data, querying of this data via varying windows before it is ingested by downstream processes, and replaying of such events, real-time streaming products also typically store data for some period of time so that events can be revisited. The length of time that events can be stored varies greatly. For example, Amazon Kinesis Data Streams provides the option to store data for an extended period of 7 days rather than the default 24 hours, whereas Apache Kafka provides the option to retain data for an unlimited amount of time. However, retention is only one of many factors to consider: the costs to store and read data also need to be taken into account, as does choosing the tooling most likely to attract technology talent.
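The relationship between retention and replay can be sketched with a toy, in-memory append-only log. This is not how Kafka or Kinesis is implemented; the `RetainedLog` class and its methods are hypothetical, and `retention_seconds=None` stands in for unlimited retention. (In Kafka itself, time-based retention is configured per topic with `retention.ms`, where `-1` removes the time limit.)

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Record:
    offset: int
    timestamp: float  # seconds
    value: str


@dataclass
class RetainedLog:
    """Hypothetical append-only log that keeps records for retention_seconds (None = unlimited)."""
    retention_seconds: Optional[float]
    records: List[Record] = field(default_factory=list)
    next_offset: int = 0

    def append(self, timestamp: float, value: str) -> int:
        # Each record gets the next sequential offset; offsets are never reused.
        self.records.append(Record(self.next_offset, timestamp, value))
        self.next_offset += 1
        return self.next_offset - 1

    def expire(self, now: float) -> None:
        # Drop records older than the retention period.
        if self.retention_seconds is None:
            return
        cutoff = now - self.retention_seconds
        self.records = [r for r in self.records if r.timestamp >= cutoff]

    def replay(self, from_offset: int) -> List[str]:
        # Re-read every still-retained record at or after the given offset.
        return [r.value for r in self.records if r.offset >= from_offset]


DAY = 24 * 60 * 60
log = RetainedLog(retention_seconds=1 * DAY)  # a hypothetical 24-hour retention period
for t, v in [(0, "a"), (0.5 * DAY, "b"), (1.2 * DAY, "c")]:
    log.append(t, v)
log.expire(now=1.3 * DAY)
print(log.replay(from_offset=0))  # "a" has aged out, so only "b" and "c" can be replayed
```

The sketch shows why retention bounds replay: once a record expires, no consumer can revisit it, so a longer retention period widens the window for replay at the cost of more storage.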

In between the extremes of batch and real-time processing is what is often referred to as mini-batch, and the community has discussed at length over the last several years what this means. Essentially, rather than processing individual events one at a time, such products process events in small batches. Because processing individual events can sometimes be cost prohibitive, and the timeliness of such processing may not be extremely critical, there can be benefits in making use of mini-batch. When considering batch versus real-time processing, I typically frame the discussion in terms of scheduled versus unscheduled processing. Can processing wait? What advantages might arise when not waiting, and vice versa?
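A minimal sketch of the mini-batch idea follows, assuming a time-ordered stream of hypothetical `(timestamp, value)` pairs. Events are grouped until either a size cap or a time cap is reached, trading a little latency for fewer, cheaper processing calls. The function and parameter names are illustrative and do not correspond to any product's API.

```python
from typing import Iterable, Iterator, List, Tuple


def mini_batches(
    events: Iterable[Tuple[float, str]], max_size: int, max_wait: float
) -> Iterator[List[str]]:
    """Group a time-ordered event stream into small batches.

    A batch closes when it holds max_size events, or when the next event
    arrives more than max_wait seconds after the batch's first event.
    Simplification: the time cap is only checked when a new event arrives;
    a real system would also flush on a timer.
    """
    batch: List[str] = []
    batch_start = 0.0
    for ts, value in events:
        # Close the current batch if this event arrives too long after it started.
        if batch and ts - batch_start > max_wait:
            yield batch
            batch = []
        if not batch:
            batch_start = ts
        batch.append(value)
        # Close the batch as soon as it is full.
        if len(batch) >= max_size:
            yield batch
            batch = []
    if batch:
        yield batch  # flush whatever remains at the end of the stream


events = [(0.0, "a"), (0.1, "b"), (0.2, "c"), (0.3, "d"), (5.0, "e")]
print(list(mini_batches(events, max_size=3, max_wait=1.0)))
```

Setting `max_size=1` degenerates to event-at-a-time processing, while a very large `max_size` and `max_wait` approach traditional batch, which mirrors the spectrum described above.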

Media: What are some of the top hurdles enterprises have to overcome when deploying and managing real-time data streaming platforms? For each one, what are some of the best practices for addressing these?

Gfesser: The data processed by real-time streaming products is typically viewed as a stream of events. In the case of products such as Apache Kafka and Amazon Kinesis Data Streams, streams can be processed similarly to database tables, although the data in such tables is defined in terms of windows based on varying criteria. For example, a use case that only considers data within a certain time constraint will define such tables differently than a use case that permits consideration of straggling (late-arriving) events. Understanding these concepts can be challenging for some traditional data practitioners.
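The difference between a strict time constraint and tolerating straggling events can be sketched with a "watermark" and an "allowed lateness", terms used by several stream processors. The function below is a hypothetical plain-Python illustration, not any product's API: the watermark is the largest event time seen so far minus the allowed lateness, and an event whose window has already closed relative to that watermark is set aside rather than counted.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def windowed_counts_with_lateness(
    events: Iterable[Tuple[float, str]],
    window_seconds: float,
    allowed_lateness: float,
) -> Tuple[Dict[float, int], List[Tuple[float, str]]]:
    """Count events per tumbling window, tolerating some out-of-order arrival."""
    counts: Dict[float, int] = defaultdict(int)
    dropped: List[Tuple[float, str]] = []
    max_seen = float("-inf")
    # Events are processed in arrival order, which may differ from event-time order.
    for ts, value in events:
        max_seen = max(max_seen, ts)
        watermark = max_seen - allowed_lateness
        window_start = (ts // window_seconds) * window_seconds
        if window_start + window_seconds <= watermark:
            dropped.append((ts, value))  # its window has already closed: too late
        else:
            counts[window_start] += 1
    return dict(counts), dropped


events = [(1.0, "a"), (6.0, "b"), (4.0, "c"), (12.0, "d"), (3.0, "e")]  # arrival order
print(windowed_counts_with_lateness(events, window_seconds=5, allowed_lateness=2))
print(windowed_counts_with_lateness(events, window_seconds=5, allowed_lateness=0))
```

With two seconds of allowed lateness, the straggler `"c"` still lands in the first window; with none, it is set aside. The same stream therefore yields different "tables" depending on how the window is defined.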

Additionally, the very concept of an "event" might take some development teams time to digest, especially with respect to how events might be defined for scenarios currently processed by legacy applications. And from the perspective of managing streams, greater capabilities and flexibility typically mean more development. While the capabilities and flexibility that Amazon Kinesis Data Streams and Apache Kafka offer exceed those of Amazon Kinesis Data Firehose, for example, Firehose requires significantly less management.

Note that deployment of a real-time streaming product in a greenfield scenario will be relatively less complex. When legacy applications are involved, care must be taken to sufficiently analyze existing processes to define the events that these processes create. When events are defined effectively, a real-time streaming product can serve as the backbone of an entire enterprise ecosystem, providing a single source from which to draw data, especially for cases in which microservice architectures are being built. In such cases, it is prudent to tackle product selection early, because the product will serve as a central component. Soon afterward, make sure ongoing build efforts address ongoing management, as some teams only test with the easy path in mind and so end up unequipped for the longer term, which often involves more data or activity.
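One starting point when defining events for a legacy application is to compare successive snapshots of a table it maintains. The sketch below assumes a hypothetical snapshot shape (primary key mapped to a row of column values) and emits generic created, updated, and deleted events. It also illustrates why analysis of the existing process still matters: a snapshot diff can only say that a row changed, not why, and it misses any intermediate changes between snapshots, so identifying the business event (for example, an order being shipped) requires understanding the legacy process itself.

```python
from typing import Dict, List, Tuple

# Hypothetical legacy table snapshot: primary key -> row (column name -> value)
Snapshot = Dict[str, Dict[str, object]]
# Hypothetical event shape: (event_type, primary_key, payload)
Event = Tuple[str, str, Dict[str, object]]


def derive_events(before: Snapshot, after: Snapshot) -> List[Event]:
    """Compare two snapshots of a legacy table and emit generic change events."""
    events: List[Event] = []
    for key, row in after.items():
        if key not in before:
            events.append(("created", key, row))
        elif row != before[key]:
            # Only include the columns whose values changed.
            changed = {col: val for col, val in row.items() if before[key].get(col) != val}
            events.append(("updated", key, changed))
    for key in before:
        if key not in after:
            events.append(("deleted", key, {}))
    return events


before = {"42": {"status": "pending", "total": 100}, "43": {"status": "pending", "total": 50}}
after = {"42": {"status": "shipped", "total": 100}, "44": {"status": "pending", "total": 75}}
print(derive_events(before, after))
```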