Chicago Big Data: Analyzing Rat Brain Neuronal Signals with Hadoop and Hive (June 26, 2012)

Personal notes from the Chicago Big Data meeting this past week at Orbitz, led by Brad Rubin, Associate Professor at the University of St. Thomas.

- work discussed during this talk started at the University of St. Thomas Graduate Programs in Software

- the Center of Excellence for Big Data was launched 2 months ago

- an attempt was made to process the data in MATLAB, but there was not enough storage, processing power, etc. to work with about 100TB of rat data

- the first thought was that Hadoop was only used for clickstream data and the like

- patient "HM" was an individual whose hippocampus was removed, so he has been the subject of a lot of studies

- IQ apparently does not change following removal of the hippocampus – the IQ of patient "HM" stayed at 112

- however, what was also found is that while procedural memory is still available, declarative memory is not

- Rubin posed the scenario of being a rat in a maze who is at a T-junction for the first time, trying to decide whether to go left or right

- in such a scenario, the cerebellum becomes very active to determine what to do next

- the second time that the same T-junction is encountered, a rat shifts into autopilot

- neurons generate electrical signals that can be recorded

- tetrodes make it possible to distinguish between these signals based on their spatial distribution

- neuroscientists can home in on individual neurons to see what they are doing

- signals obtained from tetrodes come in one of two forms, or frequency bands: action potential "spikes" and local field potentials (LFPs, or EEG)

- two different signals are heard when rats are running around a maze during an "open field foraging task"

- neuroscientists are interested in beta, low gamma, and high gamma waves to determine where a given rat is positioned, based on whether the rat has encountered the location for the first time

- the original waveform is composed of these waves, which are separated for analysis

- convolution (frequency domain)

- continuous wavelet transform

- channel averaging

- event-triggered analysis: "subsetting"

- note that phase locking involved complicated calculations that were implemented in MATLAB but not carried over into the Hadoop solution

- fast gamma and slow gamma are on separate theta cycles – heat maps are used to make this determination

- while not optimal, Rubin used equipment that was available – a 24-node Hadoop cluster running on Ubuntu Server
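
The analysis steps noted above (convolution, channel averaging, event-triggered "subsetting") can be sketched in a few lines of Python. This is only a minimal sketch under stated assumptions, not the team's Java/MapReduce code: it uses direct time-domain convolution with a Morlet-like kernel rather than whatever frequency-domain method was actually used, and the function names, the 1 kHz sample rate, and the synthetic 40 Hz signal are all made up for illustration.

```python
import math

SAMPLE_RATE_HZ = 1000  # assumed sample rate, not a figure from the talk


def morlet_like_kernel(freq_hz, sample_rate_hz=SAMPLE_RATE_HZ, cycles=5):
    """Real-valued cosine under a Gaussian envelope, centred on freq_hz."""
    sigma = cycles / (2 * math.pi * freq_hz)   # envelope width in seconds
    half = int(3 * sigma * sample_rate_hz)     # keep +/- 3 sigma of samples
    return [
        math.cos(2 * math.pi * freq_hz * (i / sample_rate_hz))
        * math.exp(-((i / sample_rate_hz) ** 2) / (2 * sigma ** 2))
        for i in range(-half, half + 1)
    ]


def convolve_same(signal, kernel):
    """Direct convolution, trimmed so the output has the input's length."""
    n, half = len(signal), len(kernel) // 2
    out = []
    for i in range(n):
        acc = 0.0
        for j, weight in enumerate(kernel):
            idx = i + half - j
            if 0 <= idx < n:                   # treat samples off the ends as zero
                acc += signal[idx] * weight
        out.append(acc)
    return out


def average_channels(channels):
    """Sample-by-sample mean across equally long channels."""
    return [sum(samples) / len(samples) for samples in zip(*channels)]


def subset_around_events(series, event_indices, before, after):
    """Cut a fixed window around each event; skip events too close to an edge."""
    return [
        series[e - before:e + after + 1]
        for e in event_indices
        if e - before >= 0 and e + after < len(series)
    ]


def mean_power(series):
    return sum(x * x for x in series) / len(series)


# Synthetic stand-in for two tetrode channels carrying a 40 Hz oscillation.
times = [i / SAMPLE_RATE_HZ for i in range(500)]
channels = [
    [math.sin(2 * math.pi * 40 * t) for t in times],
    [0.8 * math.sin(2 * math.pi * 40 * t) for t in times],
]

# Convolve every channel with a kernel, then average across channels.
band_40 = average_channels([convolve_same(c, morlet_like_kernel(40)) for c in channels])
band_80 = average_channels([convolve_same(c, morlet_like_kernel(80)) for c in channels])
power_40, power_80 = mean_power(band_40), mean_power(band_80)

# Event-triggered subsetting around two hypothetical event positions.
windows = subset_around_events(band_40, [100, 250], before=20, after=20)
print(power_40 > power_80, len(windows), len(windows[0]))
```

Each kernel responds most strongly to oscillations near its own centre frequency, which is how one recording can be separated into bands before averaging and subsetting.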

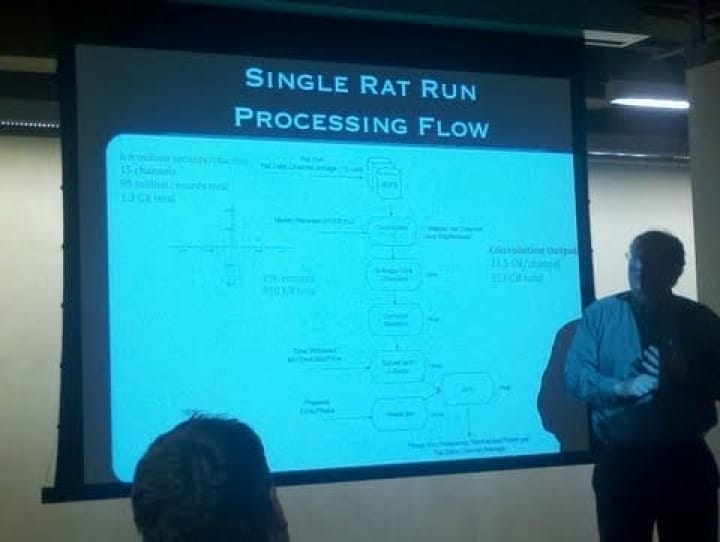

- the team went through a number of iterations to determine the processing flow for a single rat run

- a single rat running a maze for one hour generated 1.3GB of data – 6.6 million records/channel, 15 channels, 99 million records total

- convolution was implemented as a MapReduce job

- Hive was used to average data channels and compute statistics

- one rat running a maze for an hour results in 353GB of convolution output

- Hadoop calculates its percentage-complete statistic outside the close() method, which proved interesting for this project because most of the calculations were performed within the close() method

- because of this, Hadoop would report 99% complete for hours

- used sequence files as input into Hive, due to size of text data

- tried to get away from using text due to the amount of data involved

- had to write code to enable this functionality

- used binary data format called SequenceFile

- needed to create a custom Java SerDe (serializer/deserializer) for this complex value object, consisting of an int, a short, and a float, to map it to three columns (see "Writing a Simple Hive SerDe")

- also used Snappy block-level compression

- note that this turned out to be an I/O intensive task rather than a compute intensive task

- Rubin noted that there are so many knobs to turn that they could have spent a year tuning Hadoop

- the average Java/Hadoop time per convolution was 37 seconds

- the average MATLAB time per convolution was 11 seconds

- an apples-to-apples comparison between Hadoop and MATLAB is difficult because of single workstation memory limitations for the MATLAB approach

- the two approaches also processed steps in a different order
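
To put the data sizes above in perspective, here is a rough sketch of what a binary value made of an int, a short, and a float costs per record. The field names are hypothetical (the talk did not say what each field holds), and the big-endian layout is an assumption based on how Java's DataOutput writes primitives. SequenceFile headers, keys, sync markers, and Snappy compression are ignored, so this is a lower bound on payload and not a measurement.

```python
import struct

# ">ihf" = big-endian int (4 bytes), short (2 bytes), float (4 bytes), no padding.
RECORD_FORMAT = ">ihf"


def pack_record(sample_index, channel, value):
    """Serialize one hypothetical (int, short, float) record to bytes."""
    return struct.pack(RECORD_FORMAT, sample_index, channel, value)


def unpack_record(raw):
    """Deserialize the bytes back into a three-field tuple (three columns)."""
    return struct.unpack(RECORD_FORMAT, raw)


record_size = struct.calcsize(RECORD_FORMAT)

# Figures quoted in the notes for one rat running a maze for one hour.
records_per_channel = 6_600_000
channel_count = 15
total_records = records_per_channel * channel_count

# Raw payload if every record were stored in this binary layout.
binary_payload_bytes = total_records * record_size

# The same record as an illustrative comma-separated text row, for comparison.
text_row = "6599999,14,-0.123456\n"
text_row_bytes = len(text_row.encode("ascii"))

# Ratio of the quoted convolution output (353GB) to the quoted input (1.3GB).
output_to_input_ratio = 353 / 1.3

print(record_size, total_records, binary_payload_bytes)
print(text_row_bytes, round(output_to_input_ratio))
```

The fixed-width binary record is roughly half the size of the sample text row, which is consistent with the note about moving away from text, and the output-to-input ratio shows why the job ended up I/O bound.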