BigDataCamp Chicago (April 21, 2012)

Personal notes from BigDataCamp Chicago, which I attended yesterday at Morningstar. The first BigDataCamp Chicago, for which I registered but could not attend, was held on November 19, 2011. This time around, the title of the event was amended to include "Open Data", to emphasize working with data made publicly available by the government to solve problems in the public sector.

This past week, I already had the chance to browse a number of data stores made available by the City of Chicago and other government entities, mainly because I was looking for big data sets with which to work while ramping up on R Language fundamentals, and so I decided not to attend the sessions specifically focusing on the "Open Data" topic.

It so happens that I found out about this unconference through a nonprofit consulting firm, MCIC, for which I had performed some pro bono consulting work a couple years ago. The company had sponsored a competition this past year to solve public sector problems with open data, and there were connections between that competition and the upcoming Big Data Week this year.

The MC for the opening segment was Jonathan Seidman, formerly with Orbitz and now with Cloudera (he noted that Cloudera is "hiring like crazy"). Orbitz has been a heavy user of Hadoop for the last couple years. Seidman noted that there are "a ton of applications" in this space, for example the application of Hadoop to the health sciences and government.

RelayHealth works on connectivity between providers and payers, which involves transferring large volumes of eligibility and claims data, and realized that Hadoop would help. They were required to archive 5 years of data, and wanted to expose inefficiencies in claims processing and similar workflows.

First Life Research has a website that merges health care with social media and big data, aggregating information from patients from real life experiences, analyzing "billions of discussions" to discover real world treatments.

Explorys integrates clinical, financial, and operational data to improve health outcomes and reduce medical costs. Hadoop forms the base of their "DataGrid" big data platform. Pacific Northwest National Laboratory uses tools such as Hadoop and HBase for bioinformatics.

USA Search, part of the general services administration for the U.S. government, is a hosted search service for usa.gov and over 500 other government websites. They started with MySQL but later turned to Hadoop and Hive to provide a low cost and scalable solution.

Ted Dunning, currently Chief Application Architect at MapR Technologies, shared the opening address with Jonathan Seidman. Speaking to slides that I hope to obtain soon (Ted sat by me during lunch and mentioned he would post them in the near future), Ted mentioned that big companies would be adopting big data technologies such as Hadoop if there were availability or cost concerns. The more common scenario, however, is that adoption is taking place backwards.

What Ted is seeing is adoption by startups prior to large firms. While big data technologies are being applied simultaneously by both the small and large, the conventional answer in terms of whether to adopt these technologies is the amount of data involved. The old school experiences exponential scaling, whereas big data has linear scaling in terms of cost.

To get value, diminishing returns will always be experienced at the outset. Old school thinking tends to assume that the cost curve is steep, but big data makes cost linear, driving down the cost coefficient. The cost curve tends to follow the value curve. Algorithms have to scale horizontally, on commodity hardware. "What can you do with it, and how? This is what we'll be discussing. We've got a revolution on our hands."

Following the philosophy of an unconference, a number of session ideas were proposed, and attendees (about 150) voted as to which should be turned into 4 rounds of sessions.

Proposals, with the approximate number of votes for each according to the MC:

- Hadoop cluster recommended hardware configuration (15)

- open data decision making and tradeoffs (12)

- deidentification of data (40)

- using social media analytics to make chicago a better place to live (50)

- big data visualization (60)

- open data for health care and quality (?)

- moving data practice from B2B space to customer space (10)

- Hadoop, HBase, MongoDB in the OLTP space (35)

- scaling from legacy data warehouse to Hadoop in agile manner (10)

- big data use cases (15)

- intro to R and working with big data (a lot)

- mega learning with Mahout (50)

- opening up 311 for web applications (?)

Following lunch, the schedule was as follows (emailed by the organizers). The sessions I attended are in bold. Note that since this was an unconference, the session focus did not necessarily stay on target. Attendees of each session provided the direction of each discussion.

1:30 pm

Auditorium #1 – Intro to Big Data & Use Cases

Training Room #2 – De-identification – Privacy for Public Data

**Training Room #3 – Hardware Req'd for Hadoop Cluster**

2:15 pm

**Auditorium #1 – Intro to Hadoop & R & Big Data**

Training Room #2 – Open Gov: Data for Civic Applications (ex: Healthcare)

Training Room #3 – Prepare your company for Big Data (BTC)

3:00 BREAK

3:15 pm

**Auditorium #1 – Mega Learning w/ Mahout (esp K-NN)**

Training Room #2 – Social Media Analytics

Training Room #3 – Scaling from Legacy Data Warehouse Vendors to Hadoop

4:00 pm

**Auditorium #1 – HBase for Realtime**

Training Room #2 – Big Data Visualizations

Training Room #3 – n/a

4:45 pm Wrap-up & BEER!!

Training Room #3 – Hardware Req'd for Hadoop Cluster

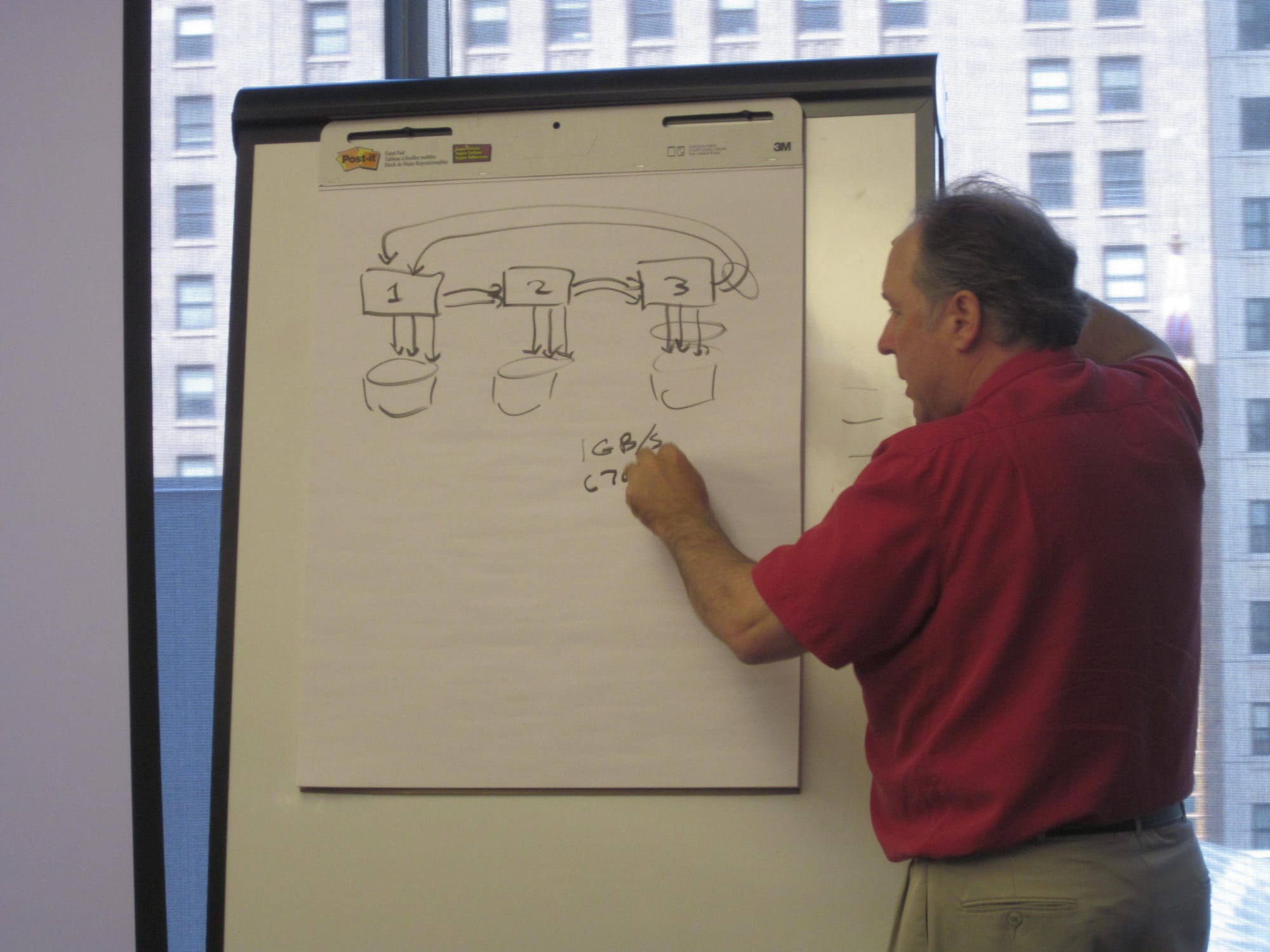

This session began by posing an example scenario in which 40tb of data exists in storage, to which 1tb of data is added each month. How is hardware selected to support this volume? Ted got up and mentioned that 10 times this workload is also very common, so we should really discuss that as well.

The discussion then turned to on-premise, private cloud, and public cloud storage: what these different types of implementations really mean, power consumption, and so on. Ted mentioned that the cloud is probably not the route to go, although it is cheaper with regard to elasticity. So we focused on the data center.

Working through the rack units currently available in the marketplace, Ted explained that with 1U servers, most buyers purchase SATA drives because these are the lowest cost: 7200rpm, 2tb. These servers have 1 or 2 CPUs (1×6 cores or 2×6 cores) and 4gb/core.

2U servers are the next level up: 12x2tb, with 1 or 2 sockets. As a side note, Ted mentioned that RAID is typically not recommended in the big data space. 4U servers are 20x2tb or 36x2tb. One 4U server will be released on the market that has 80x3tb drives (240tb) in one chassis.

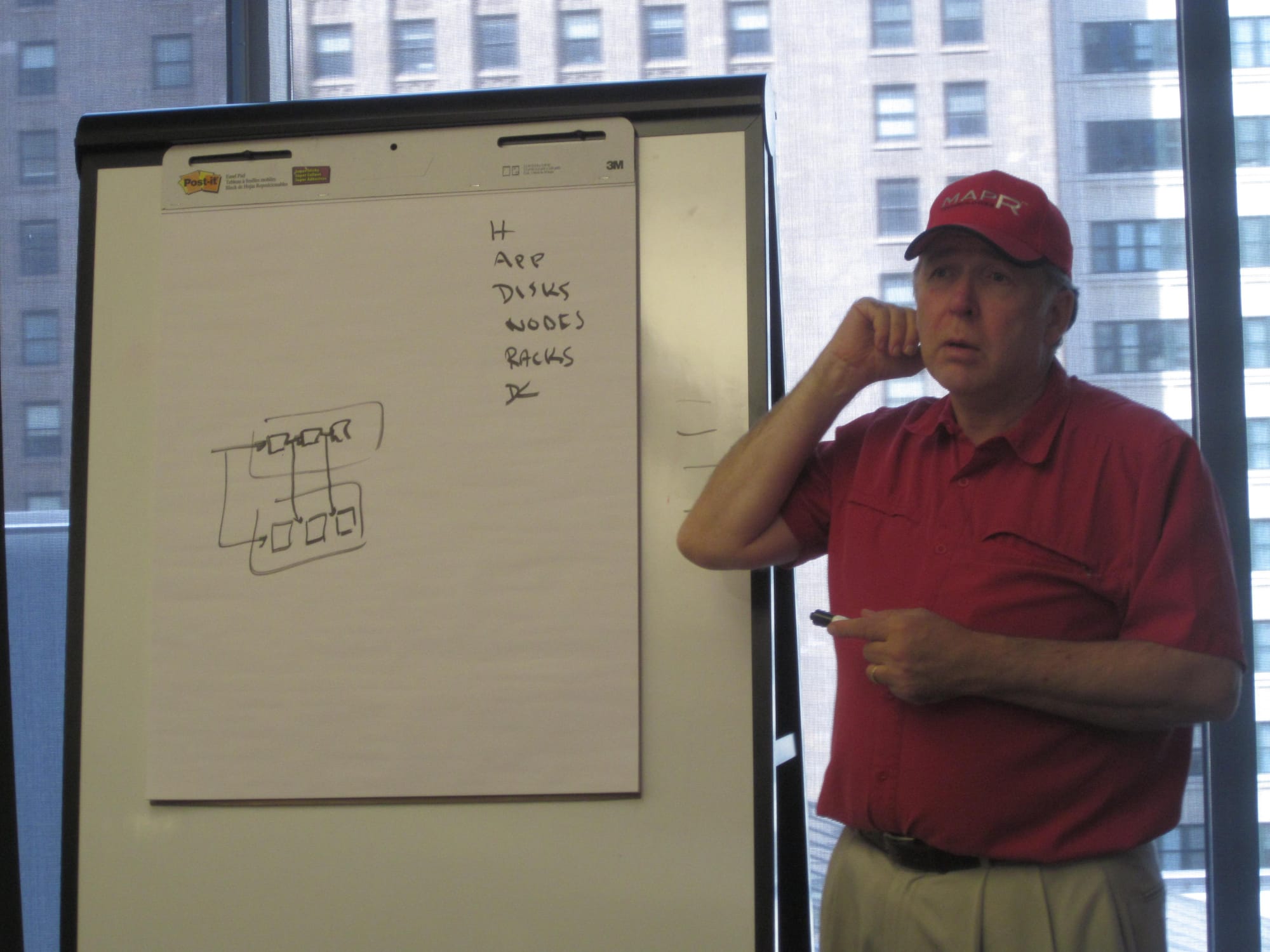

According to Ted, community Hadoop does not do a lot of drives well. MapR Hadoop gets around these issues. There is a power limit for each rack, so with community Hadoop you can only partially fill racks, wasting money. MapR Hadoop can make use of 4U servers.

Dell, HP etc – everyone – offers 1U and 2U servers. 4U 20x2tb is offered by several, and 4U 36x2tb is only offered by one vendor now. Relative cost for 1U, 2U, and 4U is about $4k, $6k, and $12k, respectively. The last, 4U, is the most cost effective.

Community Hadoop gets bottlenecked at the CPU level, not the controller level, so more disk space is needed for community Hadoop. Each step of merging needs to go to disk, because Hadoop has to deal with failures, so I/O speed makes a huge difference. With Hadoop, data is typically triplicated.
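To make the sizing question from this session concrete, here is a rough back-of-the-envelope sketch. It uses the figures mentioned above (40tb stored, 1tb added per month, data triplicated, and the approximate 2U and 4U prices). The 12-month planning horizon, the pairing of the $12k price with the 36x2tb chassis, and the function names are my own assumptions, and the estimate ignores headroom for temporary and intermediate data.

```python
import math

def servers_needed(current_tb, growth_tb_per_month, months,
                   replication, drives, tb_per_drive):
    """Return how many servers hold the replicated data after `months` of growth."""
    logical_tb = current_tb + growth_tb_per_month * months
    raw_tb = logical_tb * replication          # every block is stored `replication` times
    per_server_tb = drives * tb_per_drive
    return math.ceil(raw_tb / per_server_tb)

# Chassis figures quoted in the session; matching $12k to the 36x2tb box is an assumption.
CONFIGS = {
    "2U 12x2tb": {"drives": 12, "tb_per_drive": 2, "cost_usd": 6000},
    "4U 36x2tb": {"drives": 36, "tb_per_drive": 2, "cost_usd": 12000},
}

def estimate(months=12):
    """Map each chassis type to (server count, total hardware cost in USD)."""
    results = {}
    for name, cfg in CONFIGS.items():
        count = servers_needed(40, 1, months, 3, cfg["drives"], cfg["tb_per_drive"])
        results[name] = (count, count * cfg["cost_usd"])
    return results

if __name__ == "__main__":
    for name, (count, cost) in estimate().items():
        print(f"{name}: {count} servers, about ${cost:,}")
```

Under these assumptions the denser chassis needs fewer machines for the same raw capacity, which is consistent with the remark that the 4U option is the most cost effective. A real plan would also have to account for power limits per rack and for CPU and memory per node.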

Hadoop can only do about 400mb/sec on 1gb/sec interface, and about 720mb/sec with 100gb/sec interface. Co-occurrence accounting. Most machine learning is about counting. Switches are expensive, about $4k each. MapR has broken some switches recently. Ted trusts Cisco switches the most.
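The remark about co-occurrence and counting can be illustrated with a small sketch. This is only my own illustration of pairwise co-occurrence counting on made-up data, not anything shown in the session; the `baskets` input and the function name are hypothetical.

```python
from collections import Counter
from itertools import combinations

def cooccurrence_counts(baskets):
    """Count how often each unordered pair of items appears in the same basket."""
    counts = Counter()
    for basket in baskets:
        # Deduplicate and sort so that each pair has one canonical ordering.
        for a, b in combinations(sorted(set(basket)), 2):
            counts[(a, b)] += 1
    return counts

if __name__ == "__main__":
    # Hypothetical data: each inner list is the set of items seen together once.
    baskets = [["a", "b", "c"], ["a", "b"], ["b", "c"]]
    for pair, n in sorted(cooccurrence_counts(baskets).items()):
        print(pair, n)
```

Because each basket can be counted independently and the partial counts simply added together, this kind of job splits naturally across many machines.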

Aggregation jobs are very common, with 90% to 95% of activity associated with local reads. With virtualization, Ted cares first about what is gained rather than what it costs. Ted commented that since he sees no advantages in using virtualization for Hadoop, he does not care about the costs, and joked that not having sys admins "pissed at you" is a benefit that often outweighs costs.

Between 4gb and 8gb per core of memory is recommended - this does not vary much. Ted recounted someone commenting in a conversation once that he did not care about losing data in Hadoop, and he "got demolished" by the others attending the meeting.

After Ted commented that "Hadoop is not real time", one attendee of this session mentioned that disaster recovery in Europe and the UK is a requirement. Ted followed up by remarking that human error or human error by proxy (the application) is usually the issue.

The attendee joked that from a US perspective, if there is a nuclear attack or some other disaster, who really cares about the data? Ted mentioned that it is fairly standard to send the same data to two data centers. Processing can then start at one data center and be mirrored at the other. Ad hoc work can be performed at one data center, but in the event of a disaster, ad hoc work would stop. The serious cost is resynchronization of data.

Auditorium #1 – Intro to Hadoop & R & Big Data

A show of hands indicated that session attendees were split about evenly across beginner, intermediate, and advanced users of the R language. The first moderator of this session mentioned Google's observation that both the best and the worst aspect of R is that it is a language built by statisticians for statisticians.

Bell Labs gave us the S language, and R arose from S. S ended up getting licensed out by Bell Labs as S+, but it failed. The rise of R is a case where an open source technology outperformed the commercial version of the product.

R came out of a version created in New Zealand for the Mac, and adoption grew from there. R really lives by having its data in memory, so when it comes to big data, you need to have a plan.

R is open source, free, and extensible. What makes R popular is CRAN, which enforces documentation, QA checks, and so on. There are now about 3,600 packages that can be plugged into R core. About 10 years ago there were fewer than 100 available packages.

One of the session moderators noted that they are associated with the "finance" and "high performance" packages, having authored about a dozen of them. While big data falls into this latter category, the "high performance" packages have broader scope than just big data.

After it was noted that R needs GCC, I mentioned some of the development efforts I have been investigating of late, such as Renjin, which is built on top of the JVM. The session moderator mentioned that there have been many efforts over the years to work on similar projects – porting to different platforms – but they never seem to get 100% complete, so he had no opinion but admitted that he does not follow any of these efforts closely.

The R language is single threaded, and according to the session moderator "this will not change". Following up on this discussion, he mentioned that he likes to combine C++ and R with his development efforts.

The size of the R core engine is not really big, so installing it on multiple nodes is not a bad thing to do. R is pushing 40 years, so it is impressive that it is keeping up.

The second moderator of this session (the author of Parallel R, who goes by the name "Q", pictured to the right in the above photo) declared that Hadoop does not really do anything new. Cluster computing has been around a long time. However, Hadoop is friendly and talks to anything – Java APIs, C++ APIs etc – which is one reason it became so popular.

Hadoop is a general toolkit that you can pretty much use for anything. R is not good with big data, which is why working with Hadoop is such a natural match. The gist of MapReduce is that it operates on the principle of divide and conquer. Taking data and breaking it down into manageable chunks.

What makes MapReduce so powerful is that all of the mapping and all of the reducing can be handled in parallel. One good application of blending Hadoop and R is when certain calculations need to be performed over and over. Unfortunately, I missed what the moderator said about the second application.
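The divide-and-conquer idea described above can be sketched in a few lines. This is a single-process toy in Python, not Hadoop itself, and the function names are my own. It only shows the shape of the computation: independent map calls, a grouping step, and independent reduce calls.

```python
from collections import defaultdict

def map_phase(chunk):
    """Emit (word, 1) pairs for one chunk of text."""
    return [(word.lower(), 1) for word in chunk.split()]

def shuffle(mapped_chunks):
    """Group emitted values by key, the step that sits between map and reduce."""
    groups = defaultdict(list)
    for pairs in mapped_chunks:
        for key, value in pairs:
            groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Combine all values for one key into a single result."""
    return key, sum(values)

def word_count(chunks):
    # Each map call touches only its own chunk, so the calls could run in parallel.
    mapped = [map_phase(chunk) for chunk in chunks]
    grouped = shuffle(mapped)
    # Each reduce call touches only one key, so these could run in parallel too.
    return dict(reduce_phase(key, values) for key, values in grouped.items())

if __name__ == "__main__":
    print(word_count(["big data", "Big deal"]))
```

In a real cluster the chunks live on different machines and the framework handles the grouping step and any failures. The per-chunk and per-key independence is what lets the work spread out.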

If you do not have a problem that falls in one of these two categories, it does not make sense to use Hadoop, and you will waste a lot of money.

An R package called Segue was written by someone who did a lot of financial models (J.D. Long). Segue sends data to the cloud, which may be a big drawback for some. An RHadoop package called rmr permits the running of MapReduce jobs from R. The biggest drawback with this package is that you need to understand both R and MapReduce.

According to the session moderator, Hadoop Streaming is "complicated and not suitable for exploratory work". (Note that I stumbled upon a news release in this space involving a partnership between MapR Technologies and Informatica while looking for this link.) One of the final notes made was that MapReduce may not work for you if you need to have everything in memory, because the first thing MapReduce does is split up your job.

Auditorium #1 – Mega Learning w/ Mahout (esp K-NN)

Ted started with a story. He had a customer who thought that the k-nearest neighbor (KNN) model might work for them, so they ran a test that ran 3,000 queries against 50,000 reference accounts. But their results were 1,000,000 times too slow. Ted worked on some rapid development with the customer, resulting in 2 times too slow.

They used Mahout to get these results. Mahout is an Apache project that enables scalable learning. Ted explained that you can make a new type of matrix off the cuff, which is something difficult to do with most math libraries. The approximate KNN search that was created can perform about 2m queries in an hour or so.

Mahout does 3 things: (1) performs recommendations with mature production quality (AOL, Amazon etc use it, and a company is currently attempting to commercialize its functionality), (2) performs supervised learning and classification – you know a bunch of answers, and it is replicated (with 100k training data use R, and with 100m training data Mahout is very good, but 1m is borderline), and (3) provides clustering.

According to Ted, Mahout is very fast for what it does. It provides a collections library that is not GPL, and is "a very active project, a warm, welcoming community". Ted is a committer on the project, and one of the attendees of this session commented that Ted responds very quickly on the Mahout distribution list.

Unlike Mahout, which uses a single pass algorithm, "Hadoop is like molasses starting a program" because it uses 3 passes. A k-means is done to solve KNN. What makes it fast is the KNN that is performed within the k-means. A cluster is a surrogate to the probability distribution.
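As I understood it, the general idea is to cluster the reference points first and then search only the clusters closest to a query, instead of scanning everything. The sketch below is my own minimal illustration of that cluster-then-search approach in plain Python. It is not Mahout's implementation, and the function names and the `probes` parameter are assumptions made for the example.

```python
import math
import random

def assign(points, centroids):
    """Place each point in the cluster of its nearest centroid."""
    clusters = [[] for _ in centroids]
    for p in points:
        nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
        clusters[nearest].append(p)
    return clusters

def kmeans(points, k, iterations=10, seed=0):
    """Basic k-means on tuples of floats; returns (centroids, clusters)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)
    for _ in range(iterations):
        clusters = assign(points, centroids)
        for i, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster went empty
                centroids[i] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return centroids, assign(points, centroids)

def brute_force_knn(query, points, n):
    """Exact search: compare the query against every point."""
    return sorted(points, key=lambda p: math.dist(query, p))[:n]

def approx_knn(query, centroids, clusters, n, probes=1):
    """Approximate search: scan only the `probes` clusters nearest the query."""
    order = sorted(range(len(centroids)), key=lambda i: math.dist(query, centroids[i]))
    candidates = [p for i in order[:probes] for p in clusters[i]]
    return brute_force_knn(query, candidates, n)

if __name__ == "__main__":
    rng = random.Random(1)
    points = [(rng.random(), rng.random()) for _ in range(1000)]
    centroids, clusters = kmeans(points, 16)
    print(approx_knn((0.5, 0.5), centroids, clusters, 3, probes=2))
```

With a small `probes` value each query compares against only a fraction of the reference points, at the risk of missing a true neighbor that sits just across a cluster boundary. Raising `probes` trades speed back for accuracy.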

Ted mentioned that most of Mahout works without Hadoop, although some parts need it. One of the session attendees asked why Mahout does not perform a specific function (they were hard to understand), and Ted said that it was a good idea and asked that they submit to JIRA so that it can be implemented.

Auditorium #1 – HBase for Realtime

Norm Hanson, team lead at Dotomi, started this session by discussing some of the work with which he is currently involved (he shared a portion of this with me and a couple other attendees earlier in the day during lunch).

Norm is seeking to replace a "super high availability" OLTP database. PostgreSQL is currently being used. The data is key-value based so the database is using a single index path. But the business, and therefore the data, is growing. And they cannot take the database offline to grow the data store. He surmised that they need "some type of horizontal scaling mechanism".

Last September, they had to buy more hardware and then redistribute the data, creating new data partitions etc. He wants technology that is horizontally scalable out-of-the-box. One of the session attendees asked why they do not just use MongoDB, and Norm joked, motioning to Ted, that "the MapR guys are trying to sell me MapR".
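The pain of redistributing data after adding hardware is easy to demonstrate. The sketch below assumes a simple hash-modulo partitioning scheme, which is my own assumption for illustration; I do not know how Dotomi actually partitions its data. The key names are also made up.

```python
import hashlib

def partition(key, num_partitions):
    """Assign a key to a partition by hashing it and taking the remainder."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % num_partitions

def fraction_moved(keys, old_n, new_n):
    """Fraction of keys that land on a different partition after resizing."""
    moved = sum(1 for k in keys if partition(k, old_n) != partition(k, new_n))
    return moved / len(keys)

if __name__ == "__main__":
    keys = [f"user-{i}" for i in range(10000)]  # hypothetical key names
    share = fraction_moved(keys, 4, 5)
    print(f"{share:.0%} of keys move when going from 4 to 5 partitions")
```

Under this naive scheme, going from 4 to 5 partitions relocates roughly four out of five keys, which is why growing such a store is disruptive. Systems that scale horizontally out of the box avoid this by splitting or reassigning only a small share of the data when a node is added.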

Norm comes from an Oracle database background, where 5-9's is fairly standard. He figured that one solution might be to break up the data into pods, where certain portions of the data are divided from each other. They already have a Cassandra cluster, but it does not scale under heavy loads.

At this point, Ted mentioned that HBase has the concept of coprocessors, and mentioned that he will talk about realtime aggregations at the next Hadoop User's Group. Essentially, MapReduce jobs can be run against HBase at very high speeds.

Norm remarked that disks always fail, and that you need to test for disaster recovery, period. He also thinks that for OLTP these technologies are going to replace the Oracles of the world, and commented that he does not want anyone running MapReduce jobs against his OLTP data store.

Ted mentioned that HBase just looks at data like BLOBs, and that community support is a good reason to go with HBase, compared to other technologies. He has seen places where MongoDB is the right way to go, but just for small scale efforts usually associated with startups that need a good out-of-the-box experience.

According to Ted, Cassandra is "absolutely right" for Netflix, which has serious constraints on partitioning. Cassandra keeps track of a lot of noncritical data, such as the last spot in a movie that is being watched. HBase has a strong consistency model, which makes it bad for partitioning.

HBase insert rate is high. There is no order of magnitude difference in terms of speed between HBase, MongoDB, and Cassandra. HBase usually has strong service agreements. Some load isolation is needed. You can do tricks with HBase that you cannot do with the others. One reason for this is that you can load 30m rows per second with HBase. Ted cited an example of one company that completely rebuilds its database on a daily basis.

Do not perform joins or multirow updates with HBase. A high availability HBase name node is a must. CDH4 and MapR have high availability name nodes. Many-to-many relationships may be the wrong match for HBase. HBase is not a relational database, so you can "do outrageous things". HBase has the concept of a multiget with a regular expression, returning all rows back with one read.

Ted emphasized that the key design decision here is the fat table versus the skinny table. The skinny table, for example, has one row per person, person ID. The fat table has one column for everything – person, product, etc. Norm commented that he is considering use of Hive to import to Greenplum.